MrSub

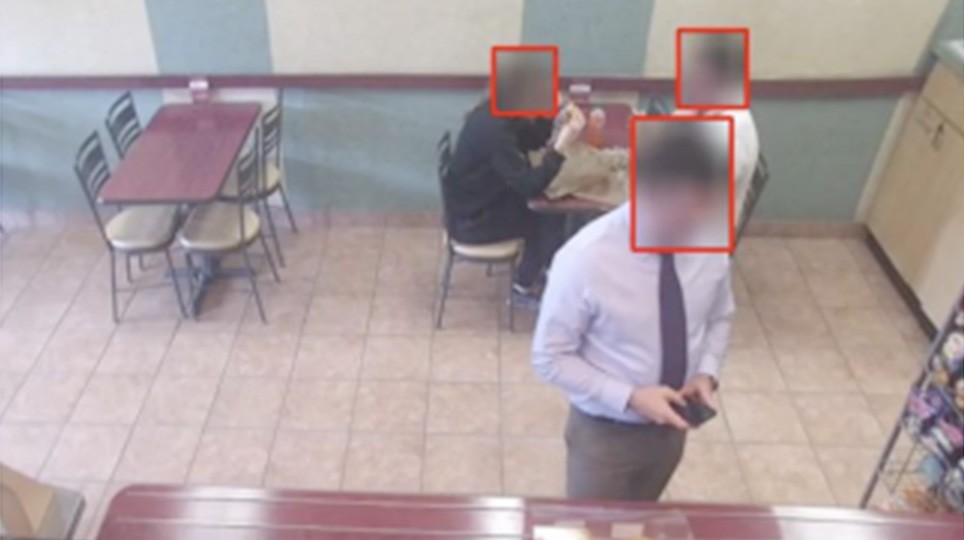

MrSub is a dataset of surveillance images "captured from two front-facing surveillance cameras installed behind the left and right side of the counter of a general fast food restaurant. Images were captured at 2 frames/minute for a period of 10 hours per day over several days." 1

"Compared to Brainwash, MrSub mainly includes larger head instances with little occlusions." 1 But like Brainwash, the MrSub dataset was captured in an unconstrained environment without participation or consent of the restaurant customers. MrSub appears to an obscure dataset that may have only been used in limited research. The dataset authors mention it is not publicly available. But it is still notable because of the way images were collected.

The only information available on MrSub so far is from the author's original paper Deep People Detection: A Comparative Study of SSD and LSTM-decoder.

Citing This Work

If you reference or use any data from the Exposing.ai project, cite our original research as follows:

@online{Exposing.ai,

author = {Harvey, Adam},

title = {Exposing.ai},

year = 2021,

url = {https://exposing.ai},

urldate = {2021-01-01}

}

If you reference or use any data from MrSub cite the author's work:

@article{Rahman2018DeepPD,

author = "Rahman, Md. Atiqur and Kapoor, Prince and Lagani{\`e}re, Robert and Laroche, Daniel and Zhu, Changyun and Xu, Xiaoyin and Ors, Ali Osman",

title = "Deep People Detection: A Comparative Study of SSD and LSTM-decoder",

journal = "2018 15th Conference on Computer and Robot Vision (CRV)",

year = "2018",

pages = "305-312"

}