Duke Multi-Target Multi-Camera Tracking Dataset

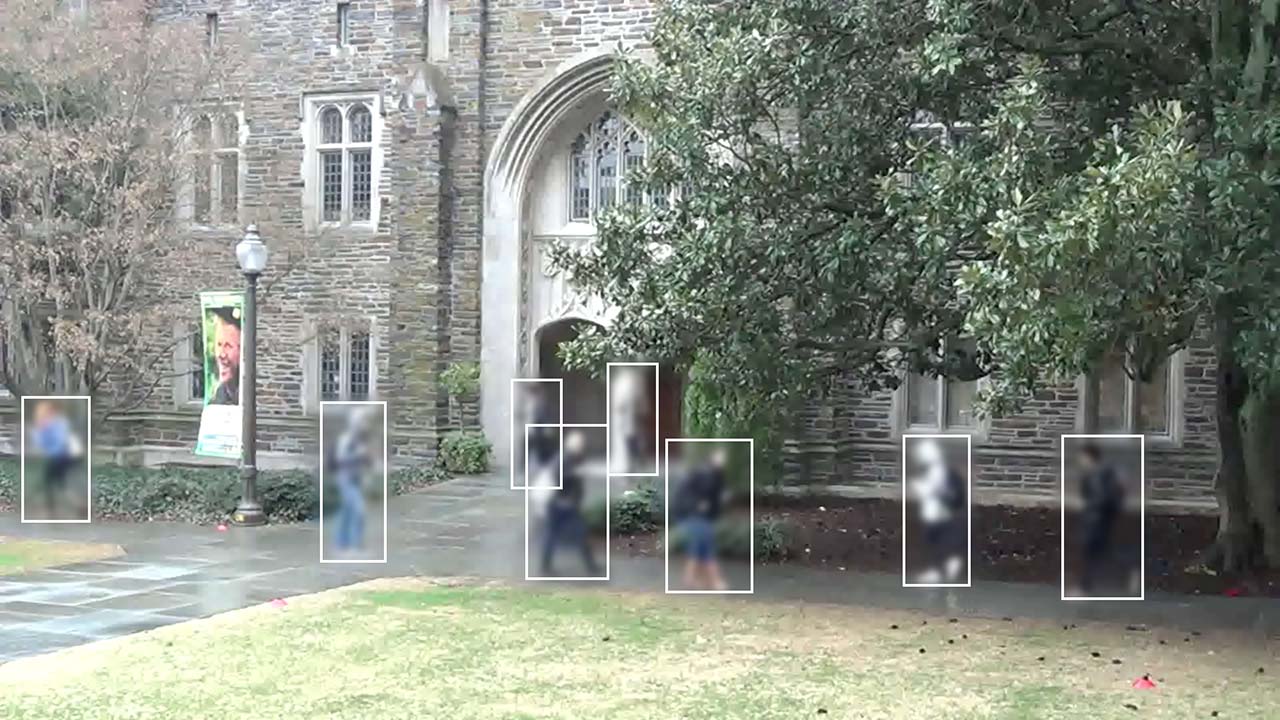

Duke MTMC (Multi-Target, Multi-Camera) is a dataset of surveillance video footage taken on Duke University's campus in 2014 and is used for research and development of video tracking systems, person re-identification, and low-resolution facial recognition.

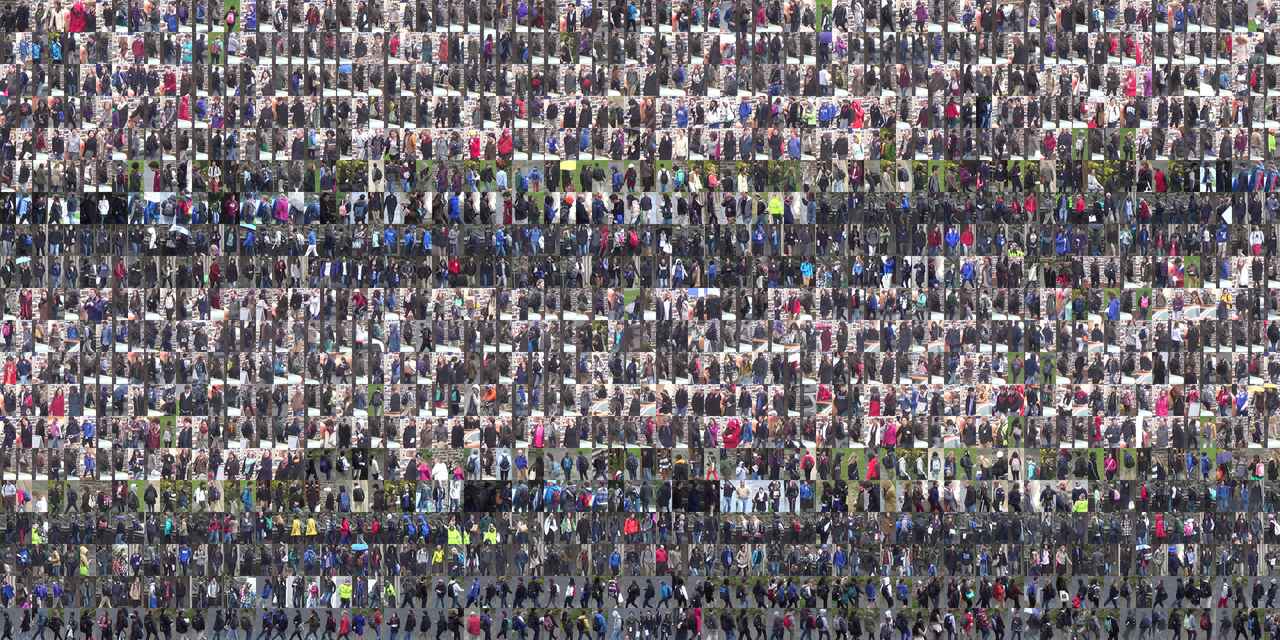

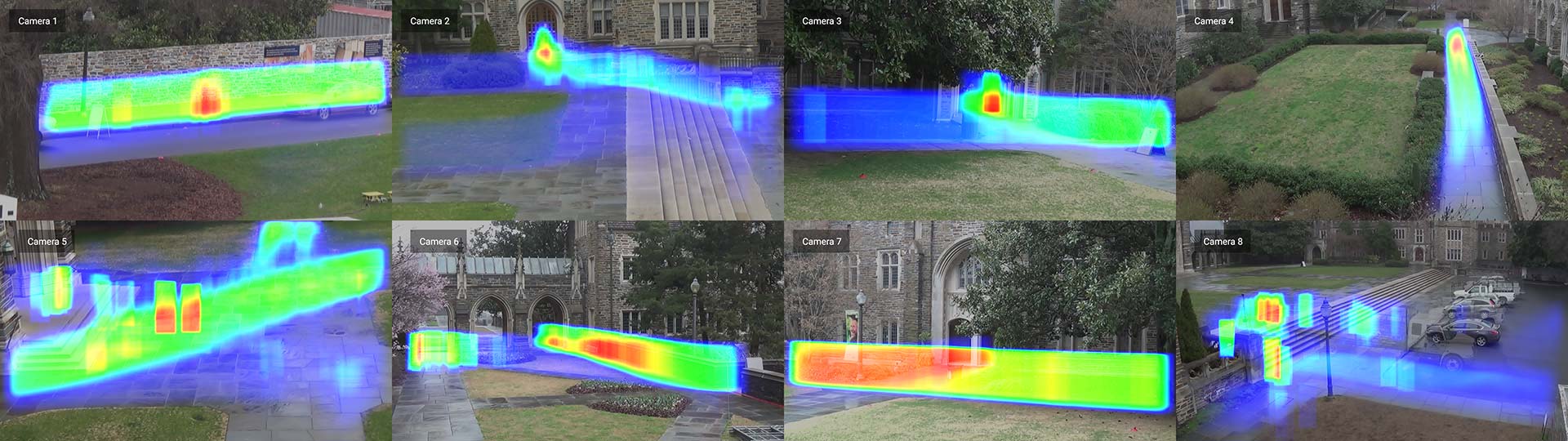

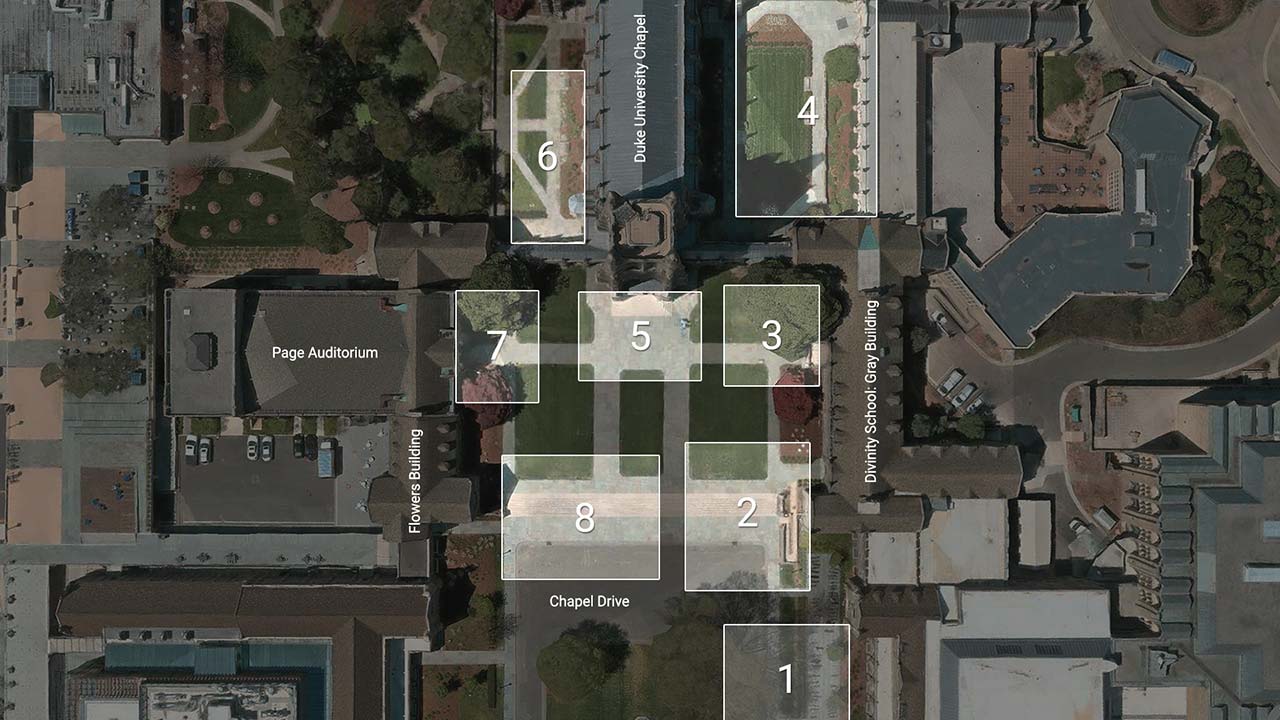

The dataset contains over 14 hours of synchronized surveillance video from 8 cameras at 1080p and 60 FPS, with over 2 million frames of approximately 2,000 students walking to and from classes. The 8 surveillance cameras deployed on campus were specifically set up to capture students "during periods between lectures, when pedestrian traffic is heavy". 1

For this analysis, over 100 publicly available research papers that used the Duke MTMC dataset were examined to determine who is using the dataset and where. The results show that the Duke MTMC dataset has spread far beyond its origins and intended use in academic research projects at Duke University. Since its publication in 2016, more than twice as many research citations have originated in China as in the United States. Among these citations were papers with links to the Chinese military and to several of the companies known to provide Chinese authorities with the oppressive surveillance technology used to monitor millions of Uighur Muslims.

Update May 2019: In response to this report and an investigation by the Financial Times, Duke University has terminated the Duke MTMC dataset.

In one 2018 paper entitled Attention-Aware Compositional Network for Person Re-identification, jointly published by researchers from SenseNets and SenseTime (and funded by SenseTime Group Limited), the Duke MTMC dataset was used for "extensive experiments" on improving person re-identification across multiple surveillance cameras, a capability with important applications in suspect tracking. Both SenseNets and SenseTime have been linked to providing surveillance technology used to monitor Uighur Muslims in China. 4 2 3

Despite repeated warnings by Human Rights Watch that the authoritarian surveillance used in China represents a humanitarian crisis, researchers at Duke University continued to provide open access to their dataset for anyone to use for any project. As the surveillance crisis in China grew, so did the number of citations with links to organizations complicit in the crisis. In 2018 alone there were over 90 research projects happening in China that publicly acknowledged using the Duke MTMC dataset. Amongst these were projects from CloudWalk, Hikvision, Megvii (Face++), SenseNets, SenseTime, Beihang University, China's National University of Defense Technology, and the PLA's Army Engineering University.

| Organization | Paper | Link | Year | Used Duke MTMC |
|---|---|---|---|---|
| Army Engineering University of PLA | Ensemble Feature for Person Re-Identification | arxiv.org | 2019 | ✔ |
| Beihang University | Orientation-Guided Similarity Learning for Person Re-identification | ieee.org | 2018 | ✔ |
| Beihang University | Online Inter-Camera Trajectory Association Exploiting Person Re-Identification and Camera Topology | acm.org | 2018 | ✔ |
| CloudWalk | CloudWalk re-identification technology extends facial biometric tracking with improved accuracy | BiometricUpdate.com | 2019 | ✔ |
| CloudWalk | Horizontal Pyramid Matching for Person Re-identification | arxiv.org | 2018 | ✔ |
| Hikvision | Learning Incremental Triplet Margin for Person Re-identification | arxiv.org | 2018 | ✔ |
| Megvii (Face++) | Person Re-Identification (slides) | github.io | 2017 | ✔ |
| Megvii (Face++) | Multi-Target, Multi-Camera Tracking by Hierarchical Clustering: Recent Progress on DukeMTMC Project | SemanticScholar | 2018 | ✔ |
| Megvii (Face++) | SCPNet: Spatial-Channel Parallelism Network for Joint Holistic and Partial Person Re-Identification | arxiv.org | 2018 | ✔ |
| National University of Defense Technology | Tracking by Animation: Unsupervised Learning of Multi-Object Attentive Trackers | SemanticScholar.org | 2018 | ✔ |
| National University of Defense Technology | Unsupervised Multi-Object Detection for Video Surveillance Using Memory-Based Recurrent Attention Networks | SemanticScholar.org | 2018 | ✔ |
| SenseNets, SenseTime | Attention-Aware Compositional Network for Person Re-identification | SemanticScholar | 2018 | ✔ |
| SenseTime | End-to-End Deep Kronecker-Product Matching for Person Re-identification | thecvf.com | 2018 | ✔ |

The reasons that companies in China use the Duke MTMC dataset for research are technically no different from the reasons it is used in the United States and Europe. In fact, the original creators of the dataset published a follow-up report in 2017 titled "Tracking Social Groups Within and Across Cameras" with specific applications to "automated analysis of crowds and social gatherings for surveillance and security applications". Both this work and the creation of the original dataset in 2014 were supported in part by the United States Army Research Laboratory.

Citations from the United States and Europe show a trend similar to that in China, including publicly acknowledged and verified use of the Duke MTMC dataset supported or carried out by the United States Department of Homeland Security, IARPA, IBM, Microsoft (which has provided surveillance to ICE), and Vision Semantics (which has worked with the UK Ministry of Defence). One paper was even jointly published by researchers affiliated with both University College London and the National University of Defense Technology in China.

| Organization | Paper | Link | Year | Used Duke MTMC |
|---|---|---|---|---|
| IARPA, IBM | Horizontal Pyramid Matching for Person Re-identification | arxiv.org | 2018 | ✔ |
| Microsoft | ReXCam: Resource-Efficient, Cross-Camera Video Analytics at Enterprise Scale | arxiv.org | 2018 | ✔ |
| Microsoft | Scaling Video Analytics Systems to Large Camera Deployments | arxiv.org | 2018 | ✔ |
| University College London | Unsupervised Multi-Object Detection for Video Surveillance Using Memory-Based Recurrent Attention Networks | SemanticScholar.org | 2018 | ✔ |
| US Dept. of Homeland Security | Re-Identification with Consistent Attentive Siamese Networks | arxiv.org | 2019 | ✔ |
| Vision Semantics Ltd. | Unsupervised Person Re-identification by Deep Learning Tracklet Association | arxiv.org | 2018 | ✔ |

By some metrics the dataset is considered a huge success. It is regarded as highly influential research and has contributed to hundreds, if not thousands, of projects advancing artificial intelligence for person tracking and monitoring. All of the above citations, regardless of the country they come from, align perfectly with the original intent of the Duke MTMC dataset: "to accelerate advances in multi-target multi-camera tracking".

The same logic applies to all the new extensions of the Duke MTMC dataset, including Duke MTMC Re-ID, Duke MTMC Video Re-ID, Duke MTMC Groups, and Duke MTMC Attribute. It also applies to the specialized datasets derived from Duke MTMC, such as the low-resolution face recognition dataset QMUL-SurvFace, which was funded in part by SeeQuestor, a computer vision provider to law enforcement agencies including Scotland Yard and Queensland Police. From the perspective of academic researchers, security contractors, and defense agencies using these datasets to advance their organizations' work, Duke MTMC provides significant value regardless of who else is using it, so long as it advances their own interests in artificial intelligence.

But this perspective comes at significant cost to civil rights, human rights, and privacy. The creation and distribution of the Duke MTMC dataset illustrates an egregious prioritization of surveillance technologies over individual rights, where the simple act of going to class or a place of worship (students were filmed going into the university's chapel) could implicate your face in a surveillance training dataset, perhaps even used by foreign defense agencies.

For the approximately 2,000 students in the Duke MTMC dataset, there may be no escape. It is impossible to remove oneself from every copy of the dataset downloaded around the world. Instead, the roughly 2,000 students and visitors who happened to be walking to class in 2014 will forever remain in all downloaded copies of the Duke MTMC dataset and all its extensions, contributing to a global supply chain of data that powers governmental and commercial expansion of biometric surveillance technologies.

Updates

- June 2, 2019: Duke University seems to have shut down the Duke MTMC dataset project

- June 2, 2019: A computer vision surveillance workshop (https://reid-mct.github.io/2019/) using the Duke MTMC dataset has been cancelled. "Due to some unforeseen circumstances, the test data has not been available. The multi-target multi-camera tracking and person re-identification challenge is cancelled. We sincerely apologize for any inconvenience caused."

Information Supply Chain

To help understand how the Duke MTMC dataset has been used around the world by commercial, military, and academic organizations, existing publicly available research citing the Duke Multi-Target, Multi-Camera Tracking Project was collected, verified, and geocoded to show how AI training data has proliferated around the world.


Video Timestamps

The video timestamps contain the likely, but not yet confirmed, date and times at which the video was recorded. Because the video timestamps align with the start and stop time sync data provided by the researchers, they at least confirm the relative timing. The precipitation on March 14, 2014 in Durham, North Carolina supports, but does not confirm, the conclusion that this day is the likely capture date.

| Camera | Date | Start | End |
|---|---|---|---|
| Camera 1 | March 14, 2014 | 4:14PM | 5:43PM |
| Camera 2 | March 14, 2014 | 4:13PM | 4:43PM |
| Camera 3 | March 14, 2014 | 4:20PM | 5:48PM |
| Camera 4 | March 14, 2014 | 4:21PM | 5:54PM |
| Camera 5 | March 14, 2014 | 4:12PM | 5:43PM |
| Camera 6 | March 14, 2014 | 4:18PM | 5:43PM |
| Camera 7 | March 14, 2014 | 4:16PM | 5:40PM |
| Camera 8 | March 14, 2014 | 4:25PM | 5:42PM |

Annotation Metadata

The original Duke MTMC dataset paper mentions 2,700 identities, but the ground truth file only lists annotations for 1,812, and the authors' own research typically cites 2,000. For this writeup we used 2,000 to describe the approximate number of students.

Ethics

Please direct any questions about the ethics of the dataset to Duke University's Institutional Ethics & Compliance Office using the number at the bottom of the page.

Updates

- Although its original authors have removed the Duke MTMC dataset, it continues to be used, even for commercial research. An October 2019 paper from NEC cites the Duke MTMC dataset in its research on video person re-identification: https://www.groundai.com/project/video-person-re-identification-using-learned-clip-similarity-aggregation/

Citing This Work

If you reference or use any data from the Exposing.ai project, cite our original research as follows:

@online{Exposing.ai,
  author = {Harvey, Adam},
  title = {Exposing.ai},
  year = 2021,
  url = {https://exposing.ai},
  urldate = {2021-01-01}
}

If you reference or use any data from Duke MTMC, cite the authors' work:

@inproceedings{Ristani2016PerformanceMA,
  author = "Ristani, Ergys and Solera, Francesco and Zou, Roger S. and Cucchiara, R. and Tomasi, Carlo",
  title = "Performance Measures and a Data Set for Multi-target, Multi-camera Tracking",
  booktitle = "ECCV Workshops",
  year = "2016"
}

References

- 1 Ristani, Ergys, et al. "Performance Measures and a Data Set for Multi-target, Multi-camera Tracking". ECCV Workshops, 2016.

- 2 https://qz.com/1248493/sensetime-the-billion-dollar-alibaba-backed-ai-company-thats-quietly-watching-everyone-in-china/

- 3 https://foreignpolicy.com/2019/03/19/962492-orwell-china-socialcredit-surveillance/

- 4 Mozur, Paul. "One Month, 500,000 Face Scans: How China Is Using A.I. to Profile a Minority". https://www.nytimes.com/2019/04/14/technology/china-surveillance-artificial-intelligence-racial-profiling.html. April 14, 2019.